🥟 Chao-Down #361 Hollywood's growing divide on AI, White House says no to restricting open-source AI, The US Air Force looks towards robot pilots for unmanned jets

Plus, LLMs are found to excel at some types of reasoning but struggle with others.

A big debate in AI circles is how well large language models can actually reason. But what does reasoning even mean?

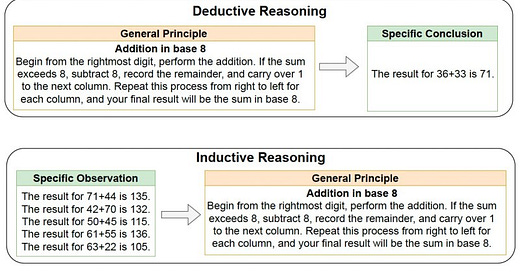

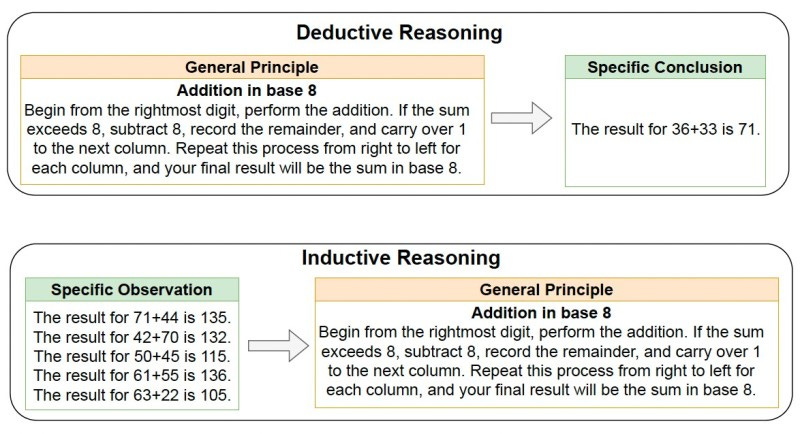

In a study by UCLA and Amazon, researchers distinguished between two types of reasoning: inductive (bottom-up, learning from examples) and deductive (top-down, applying general rules and principles). They found that LLMs perform well on tasks requiring pattern recognition (inductive reasoning) but poorly on tasks that require strict rule-following (deductive reasoning).

While many of us (myself included) are building increasingly complex systems with LLMs as the backbone, the research suggests that the best results come from providing plenty of worked examples rather than relying on any inherent reasoning ability in the model.
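For the builders out there, here's what "provide worked examples" can look like in practice: a minimal sketch of assembling a few-shot prompt in Python. It assumes a plain-text prompt where the model continues after the final `A:`. The helper `build_few_shot_prompt` and the unit-conversion examples are hypothetical, made up for illustration, and this is not the setup used in the UCLA/Amazon study.

```python
def build_few_shot_prompt(examples, query, instruction="Solve the problem. Show your work."):
    """Assemble a few-shot prompt: an instruction, worked examples, then the new query.

    `examples` is a list of (question, worked_answer) pairs. Each worked answer
    spells out the steps, so the model can pick up the pattern by example
    instead of having to derive the rule on its own.
    """
    parts = [instruction, ""]
    for i, (question, worked_answer) in enumerate(examples, start=1):
        parts.append(f"Example {i}:")
        parts.append(f"Q: {question}")
        parts.append(f"A: {worked_answer}")
        parts.append("")  # blank line between examples
    # The new question goes last; the model is expected to continue after "A:".
    parts.append(f"Q: {query}")
    parts.append("A:")
    return "\n".join(parts)


if __name__ == "__main__":
    # Hypothetical worked examples, for illustration only.
    examples = [
        ("Convert 3 km to meters.", "1 km = 1000 m, so 3 km = 3 * 1000 = 3000 m."),
        ("Convert 2.5 km to meters.", "1 km = 1000 m, so 2.5 km = 2.5 * 1000 = 2500 m."),
    ]
    print(build_few_shot_prompt(examples, "Convert 7 km to meters."))
```

The more closely the worked examples match the structure of the real task, the more the model can lean on pattern-matching, which is exactly where the study found LLMs do well.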

How are you all using LLMs?

-Alex, your resident Chaos Coordinator.

What happened in AI? 📰

OpenAI won’t watermark ChatGPT text because its users could get caught (The Verge)

LLMs excel at inductive reasoning but struggle with deductive tasks, new research shows (VentureBeat)

Hollywood's Divide on Artificial Intelligence Is Only Growing (hollywoodreporter.com)

White House says no need to restrict 'open-source' artificial intelligence — at least for now (AP News)

U.S. Air Force is developing AI pilots called robot wingmen (qz.com)

Job seekers are getting increasingly bold by 'cheating' in interviews — and AI is making it worse (msn.com)

Always be Learnin’ 📕 📖

The Senior Engineer Illusion: What I Thought vs. What I Learned (mensurdurakovic.com)

The expanded scope and blurring boundaries of AI-powered design (UX Collective)

How to Prove the ROI of AI - GTMonday by GTM Partners (substack.com)

Projects to Keep an Eye On 🛠

microsoft/MInference: Speeds up long-context LLM inference by computing attention with approximate, dynamic sparsity, reducing pre-filling latency by up to 10x on an A100 while maintaining accuracy. (Github)

OpenAutoCoder/Agentless: Agentless🐱: an agentless approach to automatically solve software development problems (Github)

apple/ToolSandbox: A Stateful, Conversational, Interactive Evaluation Benchmark for LLM Tool Use Capabilities (Github)

The Latest in AI Research 💡

AI Agents That Matter (arxiv)

Extracting Prompts by Inverting LLM Outputs (arxiv)

Questionable practices in machine learning (arxiv)

The World Outside of AI 🌎

Why do I feel hungover the day after crying or feeling stressed? (Vox)

Why Pepsi, Junk Food Makers Should Shift to Fresh Produce (Bloomberg)

Effective altruism is stumbling. Can "moral ambition" replace it? (Big Think)

Scientists are falling victim to deepfake AI video scams — here’s how to fight back (Nature)

The big idea: why your brain needs other people (The Guardian)

DOJ Considers Seeking Google (GOOG) Breakup After Major Antitrust Win (Bloomberg)